A couple of weeks ago I spent a day with a chemical manufacturing company, working with their business improvement (BI) community, 50 miles south of Milan. The welcome was very warm, but the fog was dense and cold as we donned hard hats and safety shoes for a tour of the site.

One of the key measures the BI specialists monitor is Overall Equipment Effectiveness (OEE), which is defined as:

OEE = Availability x Performance x Quality.

Availability relates to production losses due to downtime; Performance relates to the production time relative to the planned cycle time, and Quality relates to the number of defects in the final product.
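To make the arithmetic concrete, here is a minimal sketch of the OEE calculation. The function name and the example figures are my own illustrative assumptions, not numbers from the site I visited, and each factor is assumed to be expressed as a fraction between 0 and 1.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Multiply the three OEE factors, each assumed to be a fraction in [0, 1]."""
    factors = {
        "availability": availability,
        "performance": performance,
        "quality": quality,
    }
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return availability * performance * quality


# Hypothetical plant: 90% uptime, 95% of planned speed, 98% defect-free output.
score = oee(0.90, 0.95, 0.98)
print(f"OEE = {score:.1%}")  # prints "OEE = 83.8%"
```

Because the factors are multiplied, three individually respectable numbers still combine into a noticeably lower overall figure.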

It set me thinking about what a measure of Overall Knowledge Effectiveness for a specific topic might look like.

How do we measure the availability of knowledge? Is that about access to information, or people’s availability for a conversation?

What about knowledge performance? Hmmm. This is where a linear industrial model for operational performance and cycle times begins to jar against the non-linear world of sharing, learning, adapting, testing, innovating...

And knowledge quality? How do we measure that? Is it about relevance? The degree to which supply satisfies demand? The way the knowledge is presented to maximise re-use? The opportunity to loop back and refine the question with someone in real time to get deeper into the issue?

Modelling how people develop and use knowledge is so much more complicated than manufacturing processes. Knowledge isn't as readily managed as equipment!

If we limit ourselves to the “known” and “knowable” side of the Cynefin framework – the domains of “best practice” and “good practice” - are there some sensible variables which influence overall knowledge effectiveness for a specific topic or theme?

So how about:

Overall Knowledge Effectiveness = Currency x Depth x Availability x Usability x Personality

Currency: How regularly the knowledge and any associated content is refreshed and verified as accurate and relevant.

Depth: Does it leave me with unanswered questions and frustration, or can I find my way quickly to detail and examples, templates, case studies, videos etc.?

Availability: How many barriers stand between me and immediate access to the knowledge I need? If it’s written down, then these could be security/access barriers; if it’s still embodied in a person, then it’s about how easily I can interact with them.

Usability: How well has this been packaged and structured to ensure that it’s easy to navigate, discover and make sense of the key messages? We’ve all read lessons learned reports which are almost impossible to draw anything meaningful from, because the signal is buried in the noise.

Personality: I started with “Humanness”, but that feels like a clumsy term. I like the idea that knowledge is most effective when it has vitality and personality. So this is a measure of how quickly I can get to the person, or people, with expertise and experience in this area in order to have a conversation. To what extent are they signposted from the content and involved in its renewal and currency (above)?
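As a rough sketch of how the five factors above might combine, the snippet below scores each one between 0 and 1 and multiplies them, mirroring the OEE formula. The 0 to 1 scale, the function name and the example scores are all my own assumptions for illustration; nothing here is a validated measure.

```python
from math import prod
from typing import Mapping

# The five factors from the proposed equation, in the order listed above.
FACTORS = ("currency", "depth", "availability", "usability", "personality")


def overall_knowledge_effectiveness(scores: Mapping[str, float]) -> float:
    """Multiply the five factor scores, each assumed to lie in [0, 1]."""
    missing = [name for name in FACTORS if name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for name in FACTORS:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {scores[name]}")
    return prod(scores[name] for name in FACTORS)


# Hypothetical topic: well-maintained content, but the experts are hard to reach.
topic = {
    "currency": 0.9,
    "depth": 0.8,
    "availability": 0.9,
    "usability": 0.8,
    "personality": 0.2,
}
print(f"OKE = {overall_knowledge_effectiveness(topic):.2f}")  # prints "OKE = 0.10"
```

The multiplicative form means one weak factor (here, personality) drags the whole score down, however strong the other four are.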

Pauses for thought.

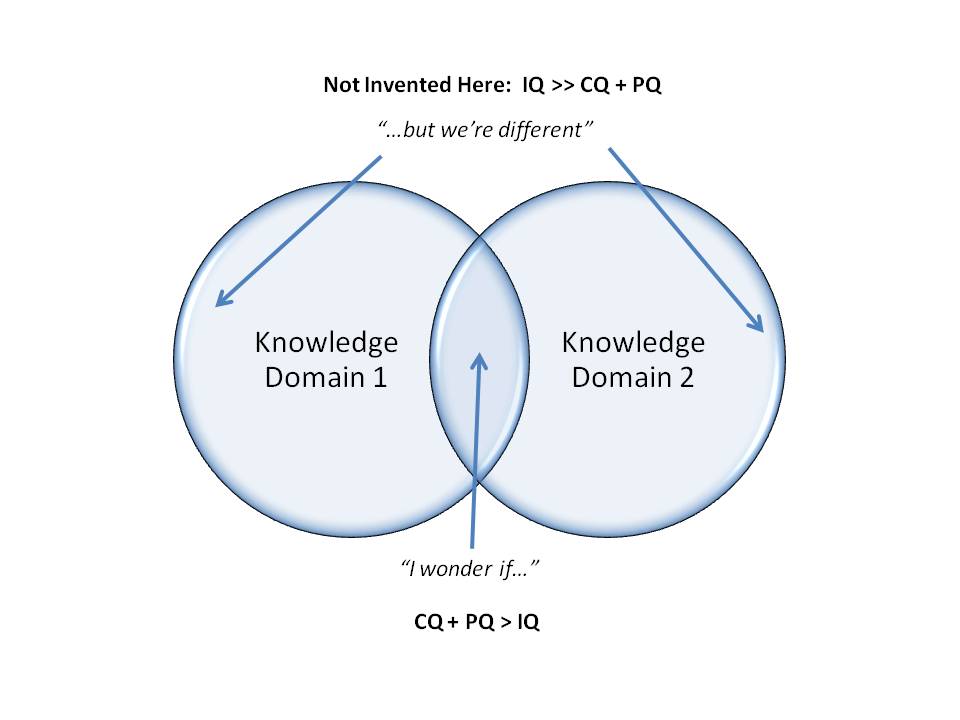

Hmmm. It still feels a bit like an "if you build it they will come" supply model. Of course people still need to provide the demand - to be willing and motivated to overcome not-invented-here and various other behavioural syndromes and barriers, apply the knowledge and implement any changes.

Perhaps what I've been exploring is really "knowledge supply effectiveness", and there's a "knowledge demand effectiveness" equation which needs to be balanced with this one?

Hence: Overall Knowledge Effectiveness is maximised when

(Knowledge Supply Effectiveness) / (Knowledge Demand Effectiveness) = 1

Not sure whether the fog is lifting or not. More thinking to be done...

Our BMW is nearly 8 years old now. It’s been brilliant, and it’s had to put up with a lot from a growing family, and now a dog with an affinity for mud and water.